Quarterly regional GDP: to publish or to pause?

Case study developed in collaboration with Heather Bovill, Deputy Director at the Office for National Statistics (ONS), previously responsible for Surveys and Economic Indicators, and Jon Gough, Assistant Deputy Director for Surveys and Economic Indicators, Office for National Statistics (ONS).

Summary

Quarterly regional Gross Domestic Product (GDP) was developed with the aim of shedding light on subnational economic activity in a more timely manner. However, issues with the methods meant that the estimates were volatile – they often changed significantly after being revised to align with national and annual GDP.

This caused issues for users such as local authorities, who were using the figures to inform local policy-making and funding decisions. But despite their volatility, local stakeholders valued the more timely regional estimates and there was demand for them.

There was a need to review and improve the methods – in particular the aligning (constraining) process to national and annual GDP. But should quarterly regional GDP continue to be published in the meantime? Were the estimates of sufficient quality to feed into decision-making? This case study explores how a team at the ONS had to decide whether to continue publishing the estimates or to pause them, including balancing demand for the figures with concerns around quality, the impact of revisions, and capacity considerations.

What was the problem?

Economic statistics like Gross Domestic Product (GDP) are important in order to understand the economy and inform funding decisions. It is valuable for local regions when these statistics can shed light on activity in granular geographical areas, and in a timely manner.

To meet this need, the ONS developed a new measure in 2019 – quarterly regional GDP, which provided breakdowns of economic activity by industry as well as region. These estimates (designated as ‘experimental statistics’ as they were still under development) could be released in a more timely manner than other GDP outputs like annual regional GDP, as they mainly relied on administrative data (Value Added Tax, or VAT, returns) rather than also drawing on survey data (which is more comprehensive but less timely).

These quarterly subnational estimates were also novel in terms of the methods (as well as the underlying data) used to produce them. Because they relied on administrative data (which is less comprehensive than survey data), the ONS team introduced methods to ensure that, where possible, the quarterly regional estimates were constrained to align with the other measures once those were published, so that all of the estimates told a consistent story. For example, this involved ensuring that the quarterly regional estimates matched the quarterly national and annual regional GDP estimates when these became available (and also later when they were revised).

"For some of the regions the revisions were changing the story. The Covid-19 pandemic amplified the impact of the issue – regions were anxious to see how their local economies were recovering but the revisions were changing this picture."

While it is standard to see some changes to published estimates due to revisions (for example, when additional data, like late VAT returns, is added), the team started to notice that revisions to the quarterly regional GDP estimates were larger than is typical. They were regularly changing trends. And importantly, the changes were not primarily due to additional data, but were due to the methods – in particular the constraining process. It was proving challenging to align the quarterly subnational estimates with the two other estimates: each quarter or year when the team carried out the constraining process, the model would align regions in a different way, changing the storyline of their economic growth or decline over time.
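To illustrate how a constraining step on its own can change a regional storyline, here is a minimal sketch that uses simple pro-rata scaling. This is an assumption made purely for illustration: the case study does not describe the ONS's actual constraining method, and the region names, figures and function names below are all hypothetical.

```python
# Illustrative only: pro-rata scaling is an assumed stand-in, not the ONS method.
# All regions and figures below are made up.

def constrain_to_total(regional, target_total):
    """Scale regional estimates so that they sum to target_total."""
    factor = target_total / sum(regional.values())
    return {region: value * factor for region, value in regional.items()}


def growth_pct(previous, current):
    """Quarter-on-quarter growth (%) for each region."""
    return {r: (current[r] / previous[r] - 1) * 100 for r in previous}


# Unconstrained regional estimates for two quarters (these never change below).
q1_raw = {"Region A": 100.0, "Region B": 50.0}
q2_raw = {"Region A": 101.0, "Region B": 50.2}

# First publication: the national totals happen to match the regional sums.
first = growth_pct(
    constrain_to_total(q1_raw, 150.0),
    constrain_to_total(q2_raw, 151.2),
)

# Later publication: the national Q2 total is revised down to 150.0.
revised = growth_pct(
    constrain_to_total(q1_raw, 150.0),
    constrain_to_total(q2_raw, 150.0),
)

for region in q1_raw:
    print(f"{region}: first {first[region]:+.2f}%, revised {revised[region]:+.2f}%")
```

In this made-up example, Region B moves from slight growth to slight decline even though its own input data is unchanged. Only the total it is constrained to has moved, which is the kind of change in ‘story’ that concerned local users.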

Local authorities were using the quarterly regional estimates to track recovery from the Covid-19 pandemic and were feeding them into their own modelling to inform funding and planning decisions (eg which industries might need a boost). When the estimates were revised and this changed the story, local users became concerned – this had implications for the decisions they were using the figures to inform.

The ONS knew that users valued the estimates and there was demand for them. However, they also received complaints from local stakeholders, who voiced their concerns about the impact of the revisions. Despite previous significant development work on the methods, the team were not sure the estimates were of sufficient quality to continue being published.

What was done?

"It was time-consuming to prepare the publications and also explain the data to users in more detail. It would have been unworkable if we tried to review and redevelop simultaneously – we knew it wasn’t going to be a ’quick fix’. It was better to pause, allow the space to do a really deep dive review, reach a better place more quickly, and allow users to also have more confidence in the estimates afterwards. It would have been risky to do both at the same time, and risky to continue publishing when we knew it wasn’t good enough quality."

The ONS team held sessions with some local authorities to talk them through the data and the reasons for the changes. This was a time-intensive process, involving a small team simultaneously working on the publications and conversing with regions to help them interpret the data and its limitations.

The team continued to monitor the situation. It was not clear whether the uncertainty of the economic situation (with recovery from Covid-19 and other economic factors) was affecting the size of the revisions, or whether the revisions might settle over time with increased economic stability.

Eventually, in July 2023, the team decided that the data was not of sufficient quality to even be an experimental statistic – the estimates were not providing value to users given the size of the revisions. They decided to pause publication and focus on further redevelopment. The team engaged with users to explain their decision. Users understood that the estimates were low quality; however, they still wanted figures to feed into their decision-making.

The team also considered if there was any alternative data that the ONS could provide that might be helpful to the local users and go some way to meeting their needs, such as providing raw VAT data as an indicator. However, following some research and discussions, users indicated that this would not meet their needs.

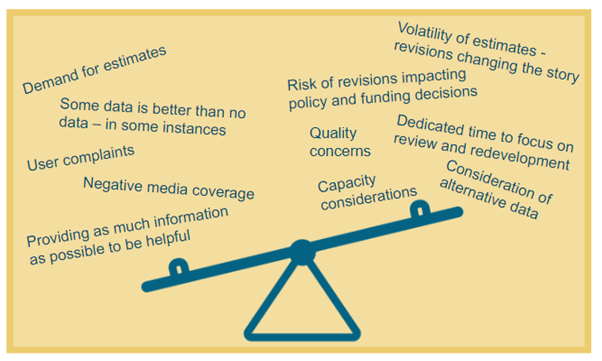

What factors were balanced?

The quarterly regional GDP team had to balance the demand for the figures with the implications of their quality. On the one hand, users were requesting the data, and in many instances data does not need to be perfect in order to usefully inform decisions – sometimes some data can be better than no data.

"We weighed up factors for and against pausing the publication as we went along – until we reached a tipping point. It was a culmination of several aspects. The main factor was that it was risky – decisions were being based on things we knew could change. There had been problems for a while, but the revisions were not settling down. Users were concerned, and we were spending so much time explaining the changes, which we didn’t have capacity to do alongside redevelopment.”

However, in this scenario the key factor was that the revisions to the figures were so large that they were changing the ‘story’. This meant that the data was not usefully informing decisions by providing a ballpark idea of GDP – instead there was a real risk that the GDP estimates were providing an inaccurate impression of the local economy, and could negatively impact the decision-making they were feeding into: decisions were being made based on figures that were liable to change. Users were not happy when figures did change, and the ONS received complaints about the revisions.

When deciding on the best course of action, the team weighed up their options, including undertaking a review of the methods while continuing to deliver the publications, versus pausing publication to focus on development. Here they also had to consider capacity constraints – producing the estimates alongside explaining the data and its limitations to users was time-intensive, and meant there would be less capacity available for implementing changes following the development and improvement work.

Infographic illustrating factors that had to be balanced.

What was the impact of these decisions?

A wide range of local authorities had been unhappy with the volatility of the estimates: all regions were impacted at some time, but smaller regions, and those with a strong contribution from specific industries, were more impacted. In addition, the revisions were attracting media interest, for example with Financial Times coverage on the revisions ‘muddling’ the picture on London’s economic recovery from the pandemic.

Users were also unhappy that the estimates were being paused. They were glad that the methods were being reviewed, but they wanted improvements to be implemented in real time while estimates continued to be published (even though they recognised that the quality of the estimates and the volatility of the revisions under the current approach were not acceptable to them).

As well as consulting with users, the ONS team consulted with stakeholders across government statistics, for example the Office for Statistics Regulation (OSR). Senior statisticians from across government agreed that the estimates should be paused. The team were satisfied that they were taking the correct decision in order to eliminate the risk of unreliable estimates being used to inform decisions, potentially later leading to negative impacts if those estimates changed.

What are the key learnings?

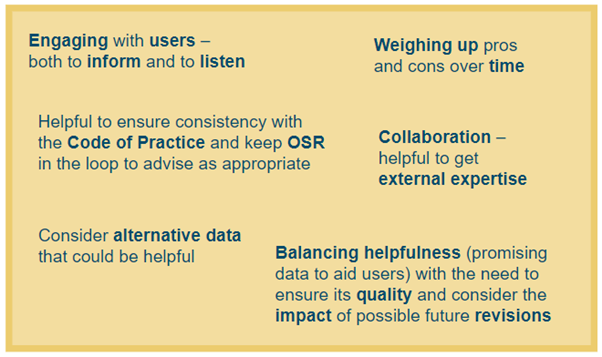

The team reflected on the need to weigh up the benefits and risks of publishing quarterly regional GDP over time as the situation progressed. Could they have paused publication earlier? At the beginning, the team had wanted to be helpful and get the new data out, consistently and transparently, as quickly as possible. It was not known how volatile the estimates would be, or if they would stabilise with time. As the situation progressed, the volatility of the estimates became clear and users were reporting many concerns, demonstrating that the estimates were not adding value. Time was needed to evaluate the quality of the estimates and the implications of this.

Key to this process was engaging with users – both to understand their perspective and to keep them informed. The ONS local teams were in close contact with local authorities, and could feed back to the central teams. Care was taken throughout the process – from explaining the limitations of the estimates and the ongoing development work, to providing advance notice and explanations following the decision to pause publication. Understanding how users benefitted from the estimates, as well as what their concerns were, was crucial to informing the decisions over whether to continue or pause publication.

This case study also illustrates the importance of collaboration and seeking external expertise where necessary. The ONS is now working with experts in regional statistics at the Economic Statistics Centre of Excellence (ESCoE) to try to improve the stability of the estimates. The planned improvement work involves reviewing the constraining approaches, methods, data sources and publication pattern, and holding discussions with users to ensure their views are fully understood.

Infographic illustrating key learnings.

Resources